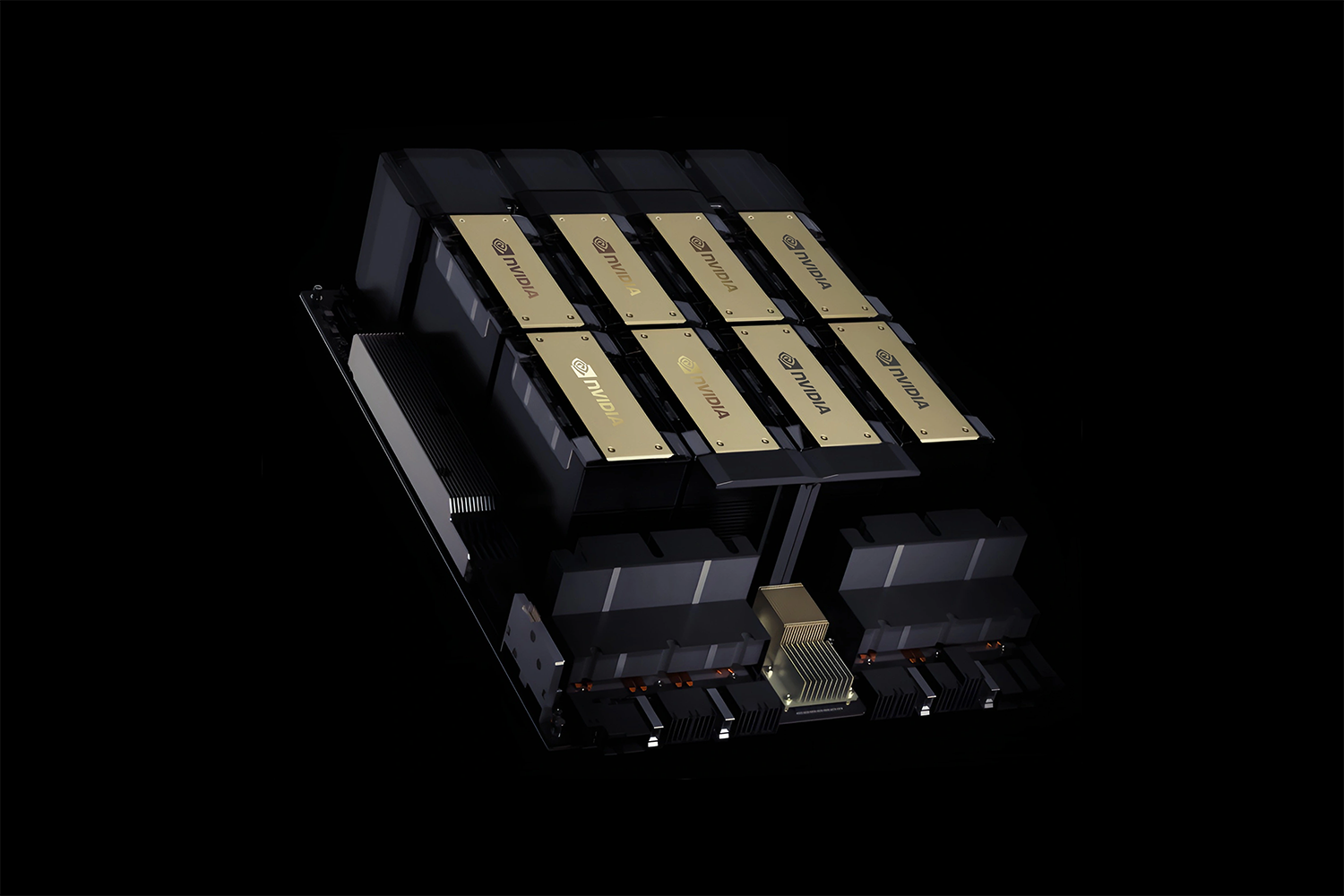

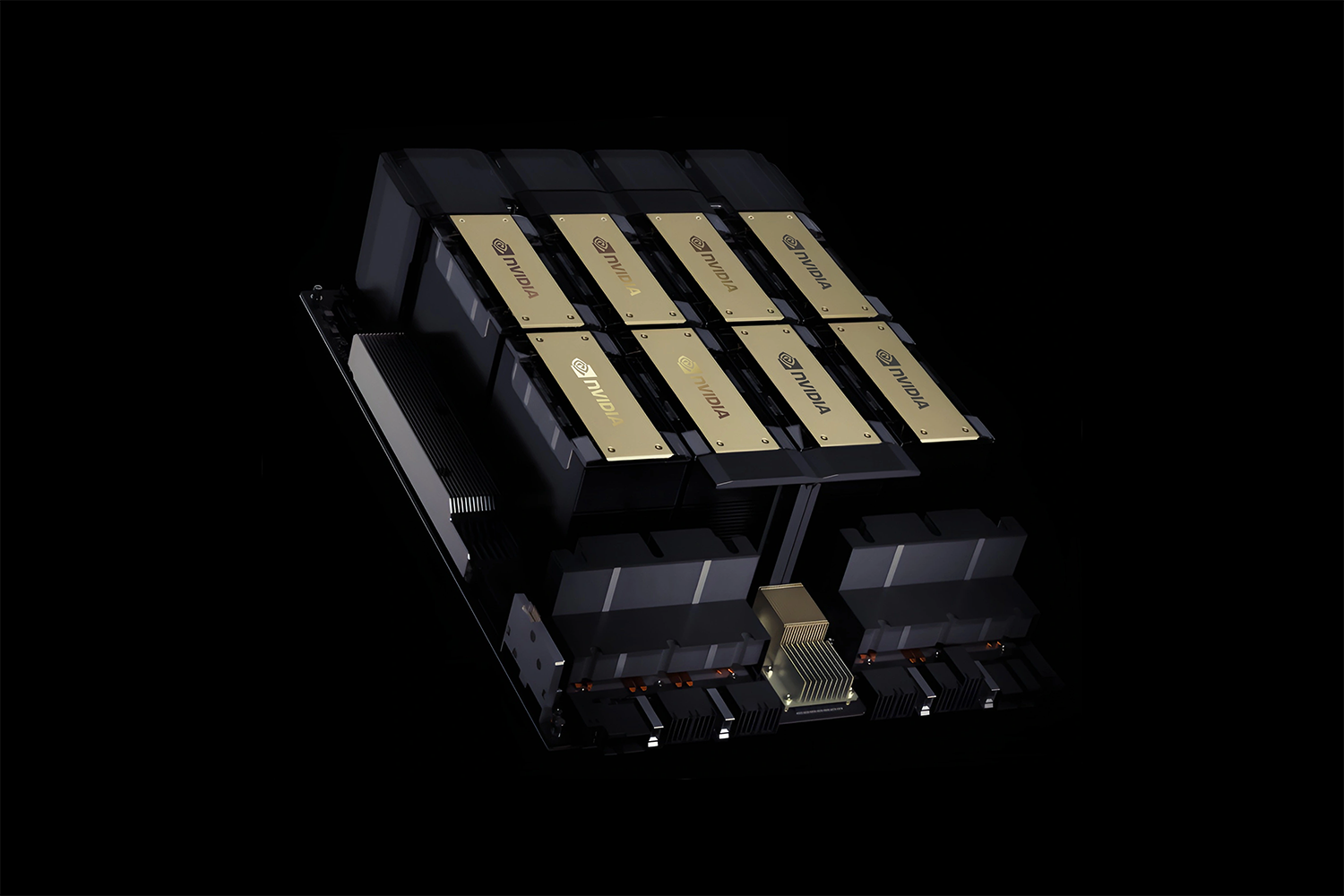

Accelerate AI Innovation with NVIDIA H200

Train with the NVIDIA® H200 GPU cluster with Quantum-2 InfiniBand networking

Get A Quote

The NVIDIA H200 Tensor Core GPU is designed for generative AI and high-performance computing (HPC) workloads, pairing high compute throughput with advanced memory capabilities. As the first GPU equipped with HBM3e technology, the H200 delivers larger and faster memory, enabling accelerated development of large language models (LLMs) and breakthroughs in scientific computing for HPC workloads.

Experience cutting-edge advancements in AI and HPC with the NVIDIA H200 GPU, ideal for demanding AI models and intensive computing applications.

The NVIDIA H200 represents a new era in AI compute, with significant improvements in memory, bandwidth, and efficiency. By leveraging Sky Cybers's exclusive early access to the H200, businesses can accelerate their AI projects and maintain a competitive edge in the fast-moving world of AI and machine learning.

Sky Cybers is now accepting reservations for H200 units, which are available now. Don't miss out on the opportunity to deploy the most powerful GPU resources in the world. Contact us today to reserve access and revolutionize your AI workflows.

| Specification | NVIDIA H200 |
| --- | --- |
| GPU architecture | NVIDIA Hopper |
| FP64 | 34 TFLOPS |
| FP64 Tensor Core | 67 TFLOPS |
| FP32 | 67 TFLOPS |
| TF32 Tensor Core | 989 TFLOPS |
| BFLOAT16 Tensor Core | 1,979 TFLOPS |
| FP16 Tensor Core | 1,979 TFLOPS |
| FP8 Tensor Core | 3,958 TFLOPS |
| INT8 Tensor Core | 3,958 TOPS |
| GPU memory | 141GB |
| GPU memory bandwidth | 4.8TB/s |
| Decoders | 7 NVDEC, 7 JPEG |
| Max thermal design power (TDP) | Up to 700W (configurable) |
| Multi-Instance GPU (MIG) | Up to 7 MIGs @ 16.5GB each |
| Form factor | SXM |
| Interconnect | NVLink: 900GB/s; PCIe Gen5: 128GB/s |
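
To put the memory figures in context, the following is a rough back-of-the-envelope sketch in Python, not a sizing tool. It uses only the 141GB capacity and 4.8TB/s bandwidth listed above; the function names are hypothetical, and the estimate assumes that only model weights occupy memory (activations, KV cache, and framework overhead are ignored), so real limits will be lower.

```python
# Figures taken from the specification table (decimal units).
GPU_MEMORY_GB = 141           # GPU memory capacity in gigabytes
MEMORY_BANDWIDTH_TBPS = 4.8   # GPU memory bandwidth in terabytes per second

# Bytes needed to store one model parameter at each precision.
BYTES_PER_PARAM = {"FP32": 4, "FP16/BF16": 2, "FP8/INT8": 1}


def max_params_billions(memory_gb: float, bytes_per_param: int) -> float:
    """Upper bound on parameters (in billions) whose weights alone fit in memory.

    Assumption: weights are the only thing in memory. 1 GB = 1e9 bytes, so
    gigabytes divided by bytes-per-parameter gives billions of parameters.
    """
    return memory_gb / bytes_per_param


def full_memory_read_ms(memory_gb: float, bandwidth_tbps: float) -> float:
    """Milliseconds needed to stream the entire memory once at peak bandwidth."""
    bandwidth_gb_per_s = bandwidth_tbps * 1000  # TB/s -> GB/s
    return memory_gb / bandwidth_gb_per_s * 1000  # seconds -> milliseconds


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        limit = max_params_billions(GPU_MEMORY_GB, nbytes)
        print(f"{precision}: up to ~{limit:.1f}B parameters (weights only)")
    read_ms = full_memory_read_ms(GPU_MEMORY_GB, MEMORY_BANDWIDTH_TBPS)
    print(f"One full pass over memory: ~{read_ms:.1f} ms at peak bandwidth")
```

Under these assumptions, a single H200 could hold the weights of a model of roughly 70 billion parameters at 16-bit precision, and one full pass over its memory would take about 29 ms at peak bandwidth. Treat both numbers as theoretical ceilings rather than measured performance.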